When AI Becomes the System

Bureaucracy was built with human limits in mind. Advances in AI mean those limits could disappear.

A few weeks back, I was sitting down with three members of my previous team for karaoke and beers. We were mourning my imminent departure from the role that had hardened my realisation that complex systems, in this case a large company, need to consider values in their decision-making processes. They asked me what I was planning to do next, and I told them I was going to take a break from corporate to try to figure out the civilisational design principles that we’re going to need to survive the 21st century. They rolled their eyes, laughed, and said “of course you are”, but then mentioned that if I decided to run another threat intelligence team, they’d love to work with me again. Their main reason was the time I was willing to put into helping them to develop their skills. The comment meant a lot to me.

Developing people is the most fulfilling part of the roles I’ve had over my career. To this day, the best piece of feedback I ever received was from a graduate who told me that I had created an environment where she felt like it was safe to fail. I spent years teaching intelligence while working for the government, and from time to time I bump into former students at conferences to discover that they’re now senior executives in Australia’s national security apparatus. That success is down to them: their hard work, dedication, and resilience. But I know that the cumulative influence that I’ve had on others, the fraction-of-a-percentage nudge that I’ve given each of them to help enable their success, is greater than anything I’ll achieve as an individual contributor. It’s why I love helping others to succeed.

I also love artificial intelligence. This project wouldn’t be possible without it. I use a range of frontier models to develop the concepts that I’ve been writing about. It’d likely have taken five times longer if I were relying on traditional search technologies to expand concepts outwards, find related reading materials, distil ideas down into their fundamental thought lines, and edit my writing so that I can convey the messages in a comprehensible way. It might not have been possible at all without them.

I also use AI heavily in my work as an intelligence analyst. I first encountered natural language processing more than a decade ago and began messing around with image classification a little after that. AI excels at working through the processing phase of the intelligence cycle because it can take unstructured data and transform it into information suitable for databasing. From there it can be aggregated, synthesised, analysed, and expressed so that its relevance to a decision maker becomes clear.

Intelligence as a discipline is very well suited to adopting artificial intelligence in its workflows. It’s heavily systematised with cognitive tasks being performed by specialised analysts throughout the intelligence cycle.

One of the most challenging aspects of intelligence is the volume of data you need to sift through to cover a target set. You can’t read everything, which is why you have these enormous intelligence agencies where information is processed up through successive levels, traditionally by humans.

As an experiment, in 2023 I built a tool that writes information reports from Telegram posts, mostly focussing on the politically motivated hacking groups, or hacktivists, and the Russia-Ukraine war. Telegram is great because you can use Python packages to retrieve posts from channels at no cost. It’s also full of people very happy to talk about the sketchy things they’re getting up to.

The tool takes the Telegram post and sends it to a large language model with some very detailed system instructions. The model reads the raw intercept in its native language and cultural context, checks whether the post meets intelligence requirements, produces a short information report that lifts the casual language to a professional reporting standard, writes an analyst comment, and extracts the key entities. The tool then pushes the result into a database. That’s the job of a linguist, a reporter, and an analyst, all in one call. The average price I was paying was $0.003 for a single API call of about 3000-5000 tokens, most of which were system prompts teaching the LLM how to write information reports. That works out to a little over 300 reports for a dollar. Even using GPT-3.5 I was getting outputs that matched the quality I would expect of a junior analyst. These days I use Gemini 2.0 Flash, and the short reports are better than what I can produce myself.
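To make that flow concrete, here is a minimal sketch of the single-call pattern, not the actual tool. It assumes the model has been instructed to return JSON with four fields. The `stub_model` function, the `InformationReport` fields, and the `reports` table are all hypothetical names invented for illustration, and the stub returns canned text rather than calling any real API.

```python
import json
import sqlite3
from dataclasses import dataclass

COST_PER_CALL_USD = 0.003  # average per-call cost quoted in the post


@dataclass
class InformationReport:
    # Hypothetical output schema; the real tool's fields are not published.
    meets_requirements: bool
    report: str
    analyst_comment: str
    entities: list[str]


def stub_model(system_prompt: str, post_text: str) -> str:
    """Hypothetical stand-in for a real LLM API call. Returns canned JSON."""
    return json.dumps({
        "meets_requirements": True,
        "report": "A hacktivist channel claimed a denial-of-service attack.",
        "analyst_comment": "The claim is unverified.",
        "entities": ["ExampleGroup"],
    })


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open a SQLite database and make sure the (assumed) table exists."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS reports "
        "(report TEXT, analyst_comment TEXT, entities TEXT)"
    )
    return db


def process_post(post_text, system_prompt, db, call_model=stub_model):
    """One model call per post: triage, report, comment, and entities."""
    parsed = InformationReport(**json.loads(call_model(system_prompt, post_text)))
    # Only store posts that the model judged relevant to the requirements.
    if parsed.meets_requirements:
        db.execute(
            "INSERT INTO reports VALUES (?, ?, ?)",
            (parsed.report, parsed.analyst_comment, json.dumps(parsed.entities)),
        )
        db.commit()
    return parsed


def reports_per_dollar(cost_per_call: float = COST_PER_CALL_USD) -> int:
    """Whole number of calls that one dollar buys at the given price."""
    return int(1 / cost_per_call)
```

Swapping `stub_model` for a function that calls a real provider is the only change needed to run this against live data, assuming the system prompt reliably produces JSON in this shape. In practice you would also want to handle malformed responses, which this sketch does not.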

Refining the system prompts and building the data pipelines took time, maybe a week of labour spread over several months of tinkering, plus occasional improvements since. It took me two decades to get to the point where I could produce this system, but the work itself was surprisingly easy. Training a good intelligence analyst takes years. I still think that the analysis and production should be kept in human hands, but some of the recent AI assessments I’ve produced using agentic workflows in top reasoning models are making me reassess that position. For the lower-level tasks, though, such as triage and simple analysis and production, AI is already outperforming junior analysts.

The problem I have today is that I can get an LLM to do a lot of the work I’d be asking of a junior analyst. It’ll do it faster, cheaper, and will produce more consistent output. Most of the tasks in the intelligence cycle can be performed by AI already. I’ve had it write reports, produce and refine requirements, contextualise intelligence to tech stacks, and identify stakeholders who need to receive intelligence. I started a talk at a recent conference by saying that in ten years, almost certainly less, all that will be left will be intelligence managers, intelligence communicators, and some highly technical specialist analysts. And the only reason they’ll still exist is that risk owners will want them to be human.

So, what does that mean for my passion for developing junior analysts? Maybe it’s time for me to shut down the laptop, disappear into the mountains, and teach meditation instead.

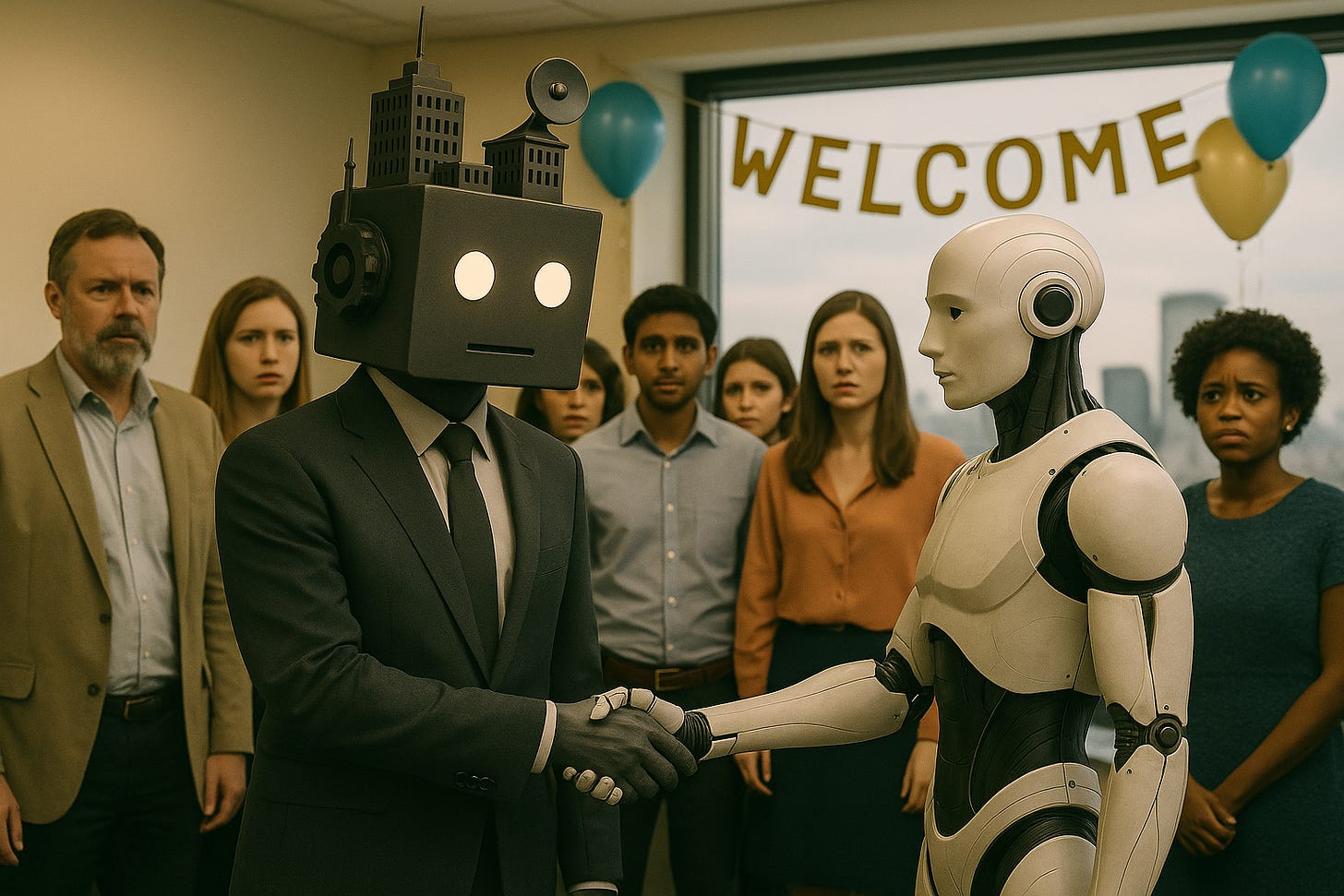

AI can become a better system

Here is the thing: it’s coming for all our white-collar jobs. If you’re a node in a bureaucratic process, AI will eventually be able to do your job better than you can. There are caveats: AI needs to have the context and experience that you have, and you need to understand the processes well enough to translate them into something that AI can comprehend. Fundamentally, though, it’s about understanding the logic behind your role well enough to teach it to an AI.

I’m not convinced that’s a bad thing. Well, it is a bad thing, because it’ll be massively disruptive to a huge portion of the population. We’ll need to rethink our priorities, our economy, our values, and our worldview. But when it comes to achieving the goals that these systems are trying to meet, it is highly likely that AI will do a better job than human organisations have been able to achieve.

I should probably take the chance to provide a definition at this point. Bureaucracy is often conflated with public services, as in the organisations which are part of the executive government and provide a service in response to a public goal. But bureaucracy, in the formal sense, is a system of organisation that is characterised by a hierarchical structure, rule-based decision-making, role specialisation, standardised processes, and impersonality. These features are designed to create predictability, accountability, and scalability. The military is the earliest and most complete example of this, but it also applies just as easily to banks, airlines, telcos, and manufacturing firms.

I’ve been inside bureaucratic systems for my entire career. For the most part I’ve worked with good, smart people. Despite that, there isn’t often a lot of questioning of why we perform the tasks that we do day to day. I have an alignment chart for those-who-have-seen-the-insanity-of-bureaucratic-systems-and-responded-as-best-they-can that I’ll share in a few weeks, if only to vent my own frustration. I tend to default to the insider reformer archetype. After a while it wears you out…

There is a lesson I’ve learned from teaching AI how to do intelligence that is broadly applicable to bureaucracy everywhere. In the earliest experiments I was doing with bulk intelligence processing, before LLMs, I’d use a workflow that might call a language translation API, return English language text, then separately perform some kind of entity extraction using a local text classifier. The data extraction and formatting would be done using deterministic rules which mostly worked, but there would be patchwork processes to catch the outliers. At that time, I couldn’t automatically write the reports because the technology simply wasn’t available.

These are all important steps in a process, things that individual analysts might have done. It was intuitively sensible for me to design my early AI-assisted processes in a way that mirrored the way I ran my teams. But as the technology changed, so did my approach. During the early experiments with my Telegram intelligence processor, I’d separate out the requirements triage, report writing, and entity extraction. It’s how I’d been taught to do the job. Eventually, though, I learned that I could get the same result by bundling up all my tasks into a modular prompt and passing it all to a mid-range model. There were no fine-tuned models and no tool-using agents, just really good instructions running in a for loop.
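As a rough illustration of what "a modular prompt running in a for loop" can look like, the sketch below keeps each task as its own named block of instructions, joins them into one system prompt, and makes a single call per post. The module names and wording are invented for this example, and `echo_model` is a hypothetical placeholder for whatever model API is actually used.

```python
from typing import Callable

# Each task that used to be a separate pipeline stage becomes one prompt module.
# The text here is illustrative only, not the author's real instructions.
PROMPT_MODULES = {
    "role": "You are an intelligence analyst writing information reports.",
    "triage": "Decide whether the post meets the intelligence requirements.",
    "report": "Rewrite the relevant content as a short, formal report.",
    "comment": "Add a brief analyst comment on significance and reliability.",
    "entities": "List the key entities mentioned in the post.",
}


def build_system_prompt(modules: dict[str, str]) -> str:
    """Join the modules into one system prompt with a labelled section each."""
    return "\n\n".join(
        f"[{name.upper()}]\n{text}" for name, text in modules.items()
    )


def echo_model(system_prompt: str, post: str) -> str:
    """Hypothetical placeholder for a real model call."""
    return f"processed:{post}"


def run_batch(
    posts: list[str],
    call_model: Callable[[str, str], str] = echo_model,
) -> list[str]:
    """One pass per post: no handovers between stages, just a loop."""
    system_prompt = build_system_prompt(PROMPT_MODULES)
    outputs = []
    for post in posts:
        outputs.append(call_model(system_prompt, post))
    return outputs
```

Keeping the modules separate in code while sending them as one prompt means an individual task can still be edited or tested on its own, without reintroducing the stage-by-stage handovers.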

The lesson wasn’t just about automation. It was about assumptions. I’d internalised a structure based on how humans work. We have linear tasks, strict handovers, clear divisions of labour. But AI doesn’t need those boundaries. When you compress a process into a single pass, it forces you to ask why we have all that structure. What if the system didn’t need to manage human labour and just needed to achieve the goal?

Bureaucracy exists to manage human cognitive, behavioural, temporal, and communication limits. AI doesn’t have those. So, the real opportunity isn’t automation, it’s alignment. Once you collapse the scaffolding you can design for what matters.

Artificial intelligence has the potential to compress bureaucracy. It will probably eventually eliminate it altogether. All you’ll have left will be goals, constraints, resources, and the interface to the humans and other intelligences that are stakeholders in the system.

Once that happens, values alignment becomes trivial.

Embedding values into AI-enabled processes

OK so I’m going to walk that one back straight away. Nothing about this is trivial. Even building out the frameworks for how you establish values is enormously challenging, much less building systems to facilitate alignment. But artificial intelligence does make values alignment possible, and that might be enough.

From a values alignment perspective, an AI-enabled system brings a very different set of capabilities to decision-making. It can take in the whole context at once, rather than relying on the partial and often compressed signals that humans have to interpret under time and information constraints. This makes it possible to baseline one situation against many others, testing for fairness and consistency across cases in a way that human judgement often struggles to replicate. It can also communicate its reasoning in ways that respect human agency. This might include presenting decisions with clarity, providing justifications suitable for the context and understanding of the human participant, providing opportunity for engagement without resource constraints, and objectively offering pathways for further options for a person to have their needs met. It can do all of this while delivering those decisions far more quickly than traditional processes allow.

Once such a system has built a sufficient understanding of the processes it’s working with, the inputs, the outputs, and the steps in between, it can go further. It can eliminate unnecessary steps, identify additional diagnostic information points, and even reconsider the underlying logic used to achieve its purpose. Crucially, it can do all this while incorporating explicit values into its decision-making process, ensuring that efficiency gains are not made at the expense of fairness, agency, consent, dignity, or ethical alignment.

Instead of filling out a series of forms, imagine sitting with an AI agent who talks through your issue or objective, taking your conversation and any artefacts and parsing out the information it needs to provide advice or make a decision. Where there are gaps, it can ask for more information or refer the user to a specialist, such as a doctor, engineer, environmental scientist, or accountant, to provide the evidence needed. It could even handle the appointment bookings itself. Navigating complex bureaucratic processes is enormously challenging for most people. It’s why we employ specialists to help people through these systems.

When AI can collapse a process end-to-end, it doesn’t just remove inefficiency, it opens the door to new kinds of feedback. Right now, our feedback loops are slow and focussed on the past: laws and compliance checks trigger only after harm has occurred. But an AI-enabled system can run continuous moral diagnostics. It can flag when a process contradicts an articulated value. It can measure outcomes not only for efficiency, but for fairness, agency, and empathy.

In other words, AI doesn’t just replace bureaucratic machinery. It gives us the chance to embed values-aware feedback loops that our existing systems were never designed to support.

The ultimate end state of this bureaucratic compression is an elimination of bureaucracy as we understand it. An aligned AI-enabled system will simply have a values-aligned objective, resource constraints, a set of people or agents to which the objective is applicable, and the flexibility to continuously adjust its methods in response to values-based feedback. It can take the intent of a program or policy and apply it to the unique context of the individual humans it’s trying to support.

This isn’t just an abstract exercise. Misaligned systems have costs that compound over time: institutional paralysis, moral dissonance, and “moral laundering,” where symbolic compliance replaces genuine adaptation. These costs aren’t always visible until they erupt in public distrust or systemic crisis. AI gives us a chance to surface these hidden costs in real time and to design systems that adapt before the damage is done.

Operationalising AI for bureaucratic reform

It’s worth calling out that the bureaucratic approach, with its emphasis on procedural consistency, impersonality, and objectivity, was an enormous upgrade from the arbitrary decision-making that came before it. It emerged from a modern worldview that objectivity could be institutionalised, that fairness emerges from uniformity, that human decision-making needs to be constrained, and that positive outcomes are the product of repeatable, predictable procedures. The idea that rules, not rulers, would govern was revolutionary. It shouldn’t be understated how much of a positive impact this shift has had on the world.

But process has now stopped being a support for human judgement and has instead replaced it. The very rigidity that once ensured fairness now struggles to adapt when values shift or when the world changes faster than the rules.

I’ve written this from the glass-half-full side of the table, but my optimism is grounded in experience. In my own advocacy work, AI tools have already helped me navigate bureaucratic processes more effectively. Even working just at the interface layer of the Four-Layer Model, AI has made it easier to produce the signals these systems need, interpret their responses, and identify when an institution is failing to comply with its own policy or the law.

If we look at them in civilisational terms, AI-enabled systems sit in the same lineage as past leaps in governance. We’ve moved from the arbitrary will of rulers to the codified procedures of bureaucracy. Each shift promised greater fairness, scalability, and predictability, but each brought new challenges. The move to procedural bureaucracy was a huge advance over arbitrary power, but it also locked in rigid processes that often fail to evolve alongside our values.

AI offers the possibility of a new leap: one that preserves the objectivity and consistency of procedural systems while restoring the adaptability and responsiveness they’ve lost. But replacing rigid rules with fluid algorithms carries its own dangers. If we overcorrect, we could end up with systems that are endlessly adaptable in form yet unaccountable in substance, shifting too fast for meaningful human oversight. However, if AI can collapse bureaucracy into a single reasoning layer, it becomes a new kind of moral infrastructure: one that can carry our values not as slogans, but built into the logic of the system itself.

That makes this more than a tool. It’s a potential civilisational upgrade, if we choose to design it as such. Embedding values into the logic of AI-enabled systems could give us processes that are not just faster or cheaper. They could constantly and effortlessly align the system’s actions with the values we claim to hold. Get this right, and we replace mechanical compliance with living alignment. Get it wrong, and we risk locking misalignment into something faster, more opaque, and harder to unwind than any bureaucracy in history.

Where I’m going from here

So far, I’ve hit you all with four dense posts. I needed to get it out of the way, mostly to get my own head straight, but also so that I can reference them going forward.

From here I want to start moving into demonstrating more practical ideas about how we can leverage AI for values alignment. Next week I’ll have a simple practical tool demonstration, a Terms of Service evaluator that was made as a custom GPT. This is intended as a break from the heavy reading before we look at Authorship, Articulation, Alignment, and Adaptation.

The tempo will be one wordy post followed by a practical demonstration. I’ll throw some lighter posts in as well, mostly reflections on the world as I see it. My goal for this project is to demonstrate micro-solutions to components of the problem of aligning systems to values. Hopefully people can find something of value in them.